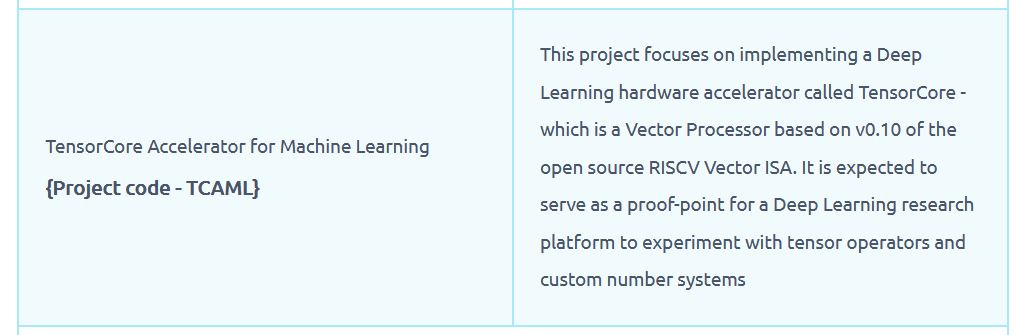

Tensor Core Accelerator HDP

Big Picture –

Anything that pertains to chip design tends to have an architecture and design phase and a verification phase. The engineering that goes into verification is its own set of skills, and the skills that go into architecture and design are unique as well.

So we have split the work: half of us are working on different designs and the other half on the verification pieces. Hopefully, in the near future, these pieces will come together so that designers can leverage the verification pieces and vice versa.

The larger scope of the project rests on RISC-V, a wonderful engine to experiment with, without having to ask for permission. It's used wherever people are innovating, and we don't see that stopping.

One application that is really a dream for a computer architect is deep learning. It needs so much compute that any improvement (2%, 3%, 5%, 10%) is worth bringing in, and there is room for many solutions to the problem. Deep learning is therefore very rich in possibilities, and RISC-V is the processor that lets us experiment with these ideas very effectively.

Project Details –

In this project we are taking an available processor that has decent attributes with respect to how you interface to it. We are taking a very small set of important instructions from the deep learning stack and building the project around them. So there is going to be a RISC-V core and a RISC-V vector extension accelerator.

The different projects we have here are: 1) modifying the RISC-V core so that it recognizes these instructions and passes them to the vector accelerator; 2) the vector accelerator itself, which decodes the vector instructions and manages their execution and retirement.
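As a rough illustration of the first task, the sketch below shows one way a core's decode stage could decide whether an instruction belongs to the vector accelerator. It assumes the standard RISC-V vector extension (RVV 1.0) encoding, where vector arithmetic and configuration instructions use the OP-V major opcode and vector loads and stores share the LOAD-FP and STORE-FP opcodes with scalar floating-point accesses, distinguished by the width field. The function names `is_vector_instruction` and `dispatch` are hypothetical and are not taken from the project.

```python
# Minimal sketch, assuming RVV 1.0 instruction encodings.
# All names here are illustrative, not part of the project's code base.

OPCODE_MASK = 0x7F
OP_V = 0b1010111       # vector arithmetic and vsetvl* configuration
LOAD_FP = 0b0000111    # shared by scalar FP loads and vector loads
STORE_FP = 0b0100111   # shared by scalar FP stores and vector stores

# Width field (bits 14:12) values used by vector memory instructions:
# 8-, 16-, 32- and 64-bit elements. Scalar FP accesses use other values.
VECTOR_WIDTHS = {0b000, 0b101, 0b110, 0b111}


def is_vector_instruction(instr: int) -> bool:
    """Return True if a 32-bit instruction word should go to the vector unit."""
    opcode = instr & OPCODE_MASK
    if opcode == OP_V:
        return True
    if opcode in (LOAD_FP, STORE_FP):
        width = (instr >> 12) & 0b111
        return width in VECTOR_WIDTHS
    return False


def dispatch(instr: int) -> str:
    """Pick the execution target for an instruction word."""
    return "vector_accelerator" if is_vector_instruction(instr) else "scalar_core"
```

In hardware this check would be a few gates in the decode stage; the Python form is only meant to make the decision rule easy to read and to reuse as a verification reference.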

This project is also working towards a Sky130 MPW. We need to have these pieces together for that, but it is going to take quite a few people and a couple more projects. Fundamentally, the project is to build the pieces of a vector engine: the vector execution machinery, the vector decode unit, which makes sure the vector instructions are recognized, and two complicated pieces, the vector load unit and the vector store unit.

The vector load unit is the engine that makes requests to the cache or memory and turns the responses into updates of the distributed memory in the vector engine. The vector store unit does exactly the opposite: it takes data elements from the distributed memory across the vector engine and collates them into an entity that can be written to memory.
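The load and store behaviour described above can be sketched as a small behavioural model. This is only an illustration under stated assumptions: memory is a flat Python list, accesses are unit-stride, and element `i` is placed in lane `i % num_lanes`, which is one common interleaving but not necessarily the one this project will use. The names `vector_load` and `vector_store` are hypothetical.

```python
# Behavioural sketch of a vector load/store pair.
# Assumptions: flat memory, unit-stride access, and element i stored in
# lane (i % num_lanes). These choices are illustrative only.

def vector_load(memory, base, vl, num_lanes):
    """Read vl consecutive elements and spread them over per-lane storage."""
    lanes = [[] for _ in range(num_lanes)]
    for i in range(vl):
        lanes[i % num_lanes].append(memory[base + i])
    return lanes


def vector_store(memory, base, lanes, vl):
    """Collate vl elements from per-lane storage back into consecutive memory."""
    num_lanes = len(lanes)
    for i in range(vl):
        # Element i sits at position i // num_lanes inside lane i % num_lanes.
        memory[base + i] = lanes[i % num_lanes][i // num_lanes]


# Usage: load six elements into four lanes, then store them elsewhere.
memory = list(range(16))
lanes = vector_load(memory, base=2, vl=6, num_lanes=4)
vector_store(memory, base=8, lanes=lanes, vl=6)
```

A model like this can double as a reference for the verification side: the store must be the exact inverse of the load for any vector length and lane count.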

Execution Strategy –

You will be sent two base papers: 1) a research paper on leveraging vector engines on FPGAs; 2) a modern RISC-V implementation of a vector engine tailored to ASIC design.

The basic project is that we need a vector engine that can do vector scale, vector add, and dot product. What makes this project unique is our interest in the fused dot product, which is essential in deep learning.
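For reference, the three operations named above can be written as plain functional models. This is a minimal sketch of what each operation computes, not the project's implementation; the function names are hypothetical, and the models ignore element width, rounding, and masking.

```python
# Functional reference models for the three basic operations.
# Illustrative only: no element widths, rounding modes, or masking.

def vector_scale(alpha, x):
    """Multiply every element of x by the scalar alpha."""
    return [alpha * xi for xi in x]


def vector_add(x, y):
    """Add two vectors element by element."""
    if len(x) != len(y):
        raise ValueError("vectors must have the same length")
    return [xi + yi for xi, yi in zip(x, y)]


def dot_product(x, y):
    """Multiply element by element and accumulate into a single scalar."""
    if len(x) != len(y):
        raise ValueError("vectors must have the same length")
    acc = 0
    for xi, yi in zip(x, y):
        acc += xi * yi
    return acc
```

Scale and add produce one independent result per element, while the dot product collapses the whole vector into one value. That difference is what makes the dot product harder to map onto independent vector lanes.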

One of the big problems traditional HPC engines have with deep learning is that they don't support these fused dot products. But if you look at the chips out there designed for AI, they all have this feature. It's a problem for vector machines because partial results must somehow be accumulated, and that ties together vector lanes that you would like to be independent.
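The coupling between lanes can be made concrete with a small model. In the sketch below, each lane accumulates its own partial sum independently, and a final reduction step then has to combine values from every lane. The element-to-lane mapping (`i % num_lanes`), the pairwise reduction, and all function names are assumptions made for illustration; the model also does not capture how a fused implementation would handle rounding.

```python
# Sketch of a lane-partitioned dot product.
# Assumptions: element i is handled by lane (i % num_lanes) and the final
# combination is a pairwise (tree) reduction. Names are illustrative.

def lane_partial_sums(x, y, num_lanes):
    """Each lane multiplies and accumulates only its own elements."""
    partial = [0] * num_lanes
    for i, (xi, yi) in enumerate(zip(x, y)):
        partial[i % num_lanes] += xi * yi
    return partial


def reduce_across_lanes(partial):
    """Combine per-lane partial sums pairwise until one value remains."""
    values = list(partial)
    while len(values) > 1:
        if len(values) % 2:
            values.append(0)  # pad so every value has a partner
        values = [values[i] + values[i + 1] for i in range(0, len(values), 2)]
    return values[0]


def lane_dot_product(x, y, num_lanes):
    """Dot product as independent lane work followed by a cross-lane step."""
    return reduce_across_lanes(lane_partial_sums(x, y, num_lanes))
```

The first function needs no communication between lanes. The second one does, and that cross-lane step is where lanes that would otherwise run independently have to wait for each other.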
